A team of MIT students has developed smart gloves that translate American Sign Language (ASL) into spoken words in real time, a potential breakthrough for accessibility and communication.

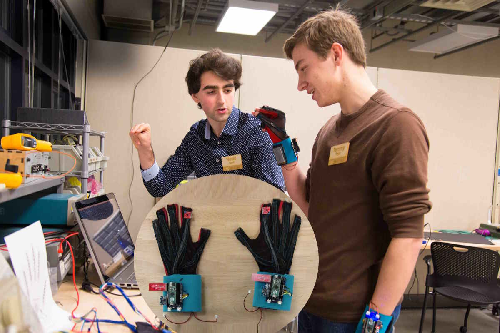

Named “SignSpeak”, the gloves combine motion sensors, AI-driven pattern recognition, and lightweight speakers. When worn, they capture hand gestures and finger movements in detail. The built-in software then processes the signs and converts them in real time into spoken language through a connected device or mobile app.
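To give a rough sense of how a sensor-to-speech pipeline like this might work, here is a minimal sketch. It is not the team's software, and every name and number in it is hypothetical: it assumes each glove reading is five finger-flex values between 0 and 1, and it picks the closest stored template by distance. It ignores movement over time, which a real system would have to model, and it stops at the text that a text-to-speech engine would then speak.

```python
import math

# Hypothetical reference readings: five finger-flex values in [0, 1]
# (0 = straight, 1 = fully bent). The numbers are invented for illustration.
TEMPLATES = {
    "hello": [0.1, 0.1, 0.1, 0.1, 0.1],
    "yes": [0.9, 0.9, 0.9, 0.9, 0.9],
    "i love you": [0.1, 0.1, 0.9, 0.9, 0.1],
}


def classify(reading, templates=TEMPLATES, max_distance=0.5):
    """Return the label of the closest template, or None if none is close enough."""
    best_label, best_dist = None, float("inf")
    for label, template in templates.items():
        dist = math.dist(reading, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= max_distance else None


def translate(readings):
    """Turn a stream of readings into words, skipping unknowns and immediate repeats."""
    words = []
    for reading in readings:
        label = classify(reading)
        if label is not None and (not words or words[-1] != label):
            words.append(label)
    return words


if __name__ == "__main__":
    stream = [
        [0.10, 0.10, 0.10, 0.10, 0.10],
        [0.12, 0.10, 0.10, 0.10, 0.10],  # same sign held for a second frame
        [0.50, 0.50, 0.50, 0.50, 0.50],  # ambiguous frame, dropped
        [0.88, 0.90, 0.90, 0.92, 0.90],
    ]
    print(" ".join(translate(stream)))
```

The `max_distance` cutoff is the main design choice here: it lets the sketch stay silent on an unclear reading instead of guessing a word.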

The gloves are meant to bridge the communication gap between people who are deaf or hard of hearing and those unfamiliar with sign language. They could make everyday conversations easier in schools, hospitals, workplaces, and public spaces.

“Our goal was to make technology truly inclusive,” said lead developer Aria Chen, a computer engineering student at MIT. “This is not just about convenience — it’s about dignity and equal access to everyday conversations.”

The gloves also support multiple languages and dialects through cloud-based updates, and the team is already working to expand support for global sign language systems.

The project has drawn attention from tech investors and accessibility advocates worldwide, with discussions underway for large-scale production and nonprofit distribution in underserved communities.

If adopted globally, SignSpeak could redefine assistive technology and let millions of people take part in everyday conversations without needing an interpreter.